Overview

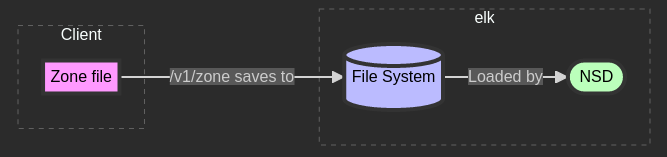

In this article, I go over my development notes on serving a non-recursive authoritative DNS server. It details my plans for a public API that lets a user upload a zone file to a public name server for an origin/domain the user owns.

What’s a domain name system server (DNS server)?

Maps a domain like elk.gg to an internet protocol address (IP address).

Previous Experiences

I’ve been running an authoritative DNS server at ns1.elk.gg for some time for one other domain of mine using NSD. For the fun of it, I set up a dnsmasq DNS server in a k3s (Kubernetes) cluster. After performance testing it, I wasn’t too happy with the results: 37,000 queries/second at 2 ms latency, local to local. NSD (Name Server Daemon) with the default config from nix on the same virtual machine can handle around 87,000 queries/second at 0.83 ms latency, local to local. For reference, the following is my dnsperf test:

    [nix-shell:~/temp]$ dnsperf -s localhost -d custom_domains.txt -c 10 -l 30
    <output omitted>

    [j@elk:~/temp]$ command cat custom_domains.txt
    example.com A

Is this simple performance testing adequate? Is dnsmasq just that much slower than NSD? Would it take more or less effort to maintain if I lean into the Kubernetes selling points of high availability and scaling out by adding nodes to the network? How does the performance look if we query from a local machine to a remote production server running our DNS server?

I’m not answering these questions here; they are just rhetorical reflections for now.
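For a quick sense of scale, the figures reported above can be compared directly. This is only arithmetic on the numbers already quoted, not a new measurement.

```python
# Figures quoted above: same virtual machine, local to local.
results = {
    "dnsmasq": {"qps": 37_000, "latency_ms": 2.0},
    "nsd": {"qps": 87_000, "latency_ms": 0.83},
}


def ratio(a: float, b: float) -> float:
    """Return a / b rounded to two decimals."""
    return round(a / b, 2)


throughput_ratio = ratio(results["nsd"]["qps"], results["dnsmasq"]["qps"])
latency_ratio = ratio(
    results["dnsmasq"]["latency_ms"], results["nsd"]["latency_ms"]
)

print(f"NSD throughput is about {throughput_ratio}x dnsmasq")
print(f"dnsmasq latency is about {latency_ratio}x NSD")
```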

There was a lot of tweaking required to get a DNS server as a service running within a container within a k3s namespace. dnsmasq was just the most straightforward to configure and get working.

The goal right now is to serve specific domains that want to use ns1.elk.gg as their DNS server, and not to recursively query other DNS servers to resolve any domain.

What’s an authoritative domain name system server (DNS server)?

An authoritative DNS server is responsible for providing definitive answers to DNS queries about domain names within its zone of authority.

- Stores the actual DNS records for a specific domain or set of domains.

- Provides authoritative responses directly to recursive DNS servers or to users’ devices.

- Is the final source of truth for the DNS information of its designated domains.

Other types of DNS servers, like root servers and top level domain (TLD) servers, are also authoritative for their respective zones. They work together in a hierarchical system to resolve domain names across the internet.
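To make the "answers only from its own zones, never recurses" idea concrete, here is a minimal sketch. The `ZONES` table, `find_zone`, and `answer` are hypothetical names for illustration only, and the response codes are simplified; this is not how NSD is implemented.

```python
from typing import Optional

# Hypothetical in-memory zone data: zone -> {(owner name, type): [values]}.
ZONES = {
    "example.com.": {
        ("example.com.", "A"): ["10.0.1.102"],
        ("example.com.", "NS"): ["ns1.elk.gg."],
    },
}


def find_zone(qname: str) -> Optional[str]:
    """Return the closest enclosing zone we are authoritative for, if any."""
    labels = qname.rstrip(".").split(".")
    for i in range(len(labels)):
        candidate = ".".join(labels[i:]) + "."
        if candidate in ZONES:
            return candidate
    return None


def answer(qname: str, qtype: str) -> tuple[str, list[str]]:
    """Answer from local zone data only; never ask another server."""
    qname = qname.lower().rstrip(".") + "."
    zone = find_zone(qname)
    if zone is None:
        # Not one of our zones, and we do not recurse.
        return ("REFUSED", [])
    names = {name for (name, _rtype) in ZONES[zone]}
    if qname not in names:
        return ("NXDOMAIN", [])
    # The name exists; an empty list means "no data of this type".
    return ("NOERROR", ZONES[zone].get((qname, qtype), []))
```

A recursive resolver, by contrast, would go on to query other servers in the first branch instead of refusing.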

Dev Log

NSD (Name Server Daemon)

By default, our NSD config sets chroot, which restricts what NSD (nsd:nsd) can see in the file system, so chroot /var/lib/nsd means it can’t see anything outside of that directory.

Setup for nsd-control (remote-control, but I only need it on localhost):

A systemd service creates the /etc/nsd directory and runs nsd-control-setup, which creates the keys needed to enable remote-control. That in turn lets us use nsd-control to add zone files at runtime.

Setup for ad-hoc pattern:

We need to append some config to our existing nsd.conf. The nix options don’t support the patterns option, but thankfully it works smoothly using services.nsd.extraConfig. Under patterns, we define our pattern name and the directory containing its zone files. We use a wildcard so that all files in the directory are treated as zone files. ad-hoc is the programmer-defined name I gave to the pattern associated with the zone file directory.
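With remote-control enabled and a pattern defined, adding a zone at runtime from Python could look roughly like the sketch below. It assumes nsd-control is on the PATH and that the process is allowed to use it; `addzone_command` and `add_zone` are hypothetical helper names, not code from my API.

```python
import subprocess


def addzone_command(zone: str, pattern: str) -> list[str]:
    """Build the nsd-control invocation that adds a zone using a pattern."""
    return ["nsd-control", "addzone", zone, pattern]


def add_zone(zone: str, pattern: str = "ad-hoc") -> None:
    """Run nsd-control; raises CalledProcessError on a non-zero exit code."""
    subprocess.run(addzone_command(zone, pattern), check=True)
```

Passing the arguments as a list rather than a shell string keeps a user-supplied domain from being interpreted by a shell.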

What’s a zone file / DNS records?

Records, defined by the domain owner, that map a domain or subdomain to an IPv4 or IPv6 address and set timing values such as TTLs. Each record in the file can do more than just map a domain to an IP. There are record types that signify something else entirely for the domain, such as start of authority (SOA) for declaring your primary DNS server, MX for configuring a mail server at the DNS level, and text (TXT) records for setting arbitrary values. A minimal example would be the following.

    $ORIGIN example.com.
    @ IN SOA ns1.elk.gg. postmaster.example.com. (
        2019268754 ; Serial
        3600       ; Refresh
        1800       ; Retry
        604800     ; Expire
        86400 )    ; Minimum TTL
      IN NS ns1.elk.gg.
      IN NS ns2.elk.gg.
      IN NS ns3.elk.gg.
      IN NS ns4.elk.gg.
      IN A 10.0.1.102

Throughout the step-by-step custom setup, we are running as root to manually put things together and make sure they work as expected with my desired configuration, but chown or switch user to nsd:nsd as needed for our nix build and systemd services running nsd.

Note that the nixpkgs nix module for services.nsd creates this user and group, nsd:nsd. Later, I define my imperative steps as declarative steps in my nix module, namespace-server, which wraps the nsd service.

Depending on the throughput of the API and the number of open connections, we could hit the open file descriptor limit for a process. We increase this limit by configuring our systemd service for the API with

    serviceConfig = {
      LimitNOFILE = 65535;
    };

Though we later rate limit our API to 100 requests/second and set 6 workers for the http server. Our open file count for the process will be well below this OS constraint.
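To confirm which limit a running process actually received, something like the following sketch can be run inside the service. It assumes a Unix-like system, since the standard library resource module is not available elsewhere; `nofile_limits` is a hypothetical helper name.

```python
import resource


def nofile_limits() -> tuple[int, int]:
    """Return the (soft, hard) open file descriptor limits for this process."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)


soft, hard = nofile_limits()
print(f"soft limit: {soft}, hard limit: {hard}")
```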

My API was simple enough that I could live with pushing from the nss repo and then running

    nix flake lock --update-input namespace-server && rebuild-switch

for each change to my API during dev work and testing. Otherwise, I would parameterize the port in the applicable tests and run the Python Flask server directly on port 8000, testing as I go with --debug too.

Alternatively, I can source the input in my system flake from a local file (module or flake.nix), but there are some quirks to that.

Why Nix?

I’ve been actively using nixos across at least 3 computers/virtual machines over the last year. Once you paint something with nix, it’s encouraging to paint it with more nix. Still, it is compatible with deploying things like containers imperatively through rsync or an ansible playbook, and with setting up dev shells that help link libraries to run arbitrary software.

I’m building upon my existing infrastructure, which mostly uses nixos for system builds, application configs, etc. For dev work, I just run things directly as much as I can, creating dev shells, containers, and virtual machines when needed, as nixos can get in the way of imperatively running arbitrary software.

The applied self.inputs.namespace-server.nixosModules.nss is analogous to docker compose but with several improvements.

I won’t go into the comparisons here, but more on that in Matthew Croughan’s Talk.

NixOS Package Flake

Building nix derivations, modules, and flake for: nss, namespace-server, and namespace-server-api

- nss bundles everything

- namespace-server wraps the nixpkgs nix module for NSD, configuring it to my needs

- namespace-server-api enables users to add their zone file for their domain to my namespace server, ns1.elk.gg, etc

    $ nix flake show
    git+file:///home/j/projects/namespace_server
    ├───api-port: unknown
    ├───nixosModules
    │   ├───default: NixOS module
    │   ├───namespace-server: NixOS module
    │   ├───namespace-server-api: NixOS module
    │   └───nss: NixOS module
    └───packages
        └───x86_64-linux
            └───namespace-server-api-pkg: package 'python3-3.12.4-env'

This feeds into my workflow nicely, running nixos on my dev machine and nixos in my primary virtual machine for elk.gg.

poetry2nix and poetry are relatively new to me, but they provided a pretty smooth experience, an improvement over python setup.py and nixpkgs mkDerivation for packaging and building.

One gotcha from poetry2nix was that I needed to access dependencyEnv from what mkPoetryApplication returns, as gunicorn was a runtime dependency and not just a library for my Python module.

In the attribute set returned by the outputs function of my flake.nix,

    inherit (poetry2nix.lib.mkPoetry2Nix { inherit pkgs; }) mkPoetryApplication;
    namespace-server-api-pkg = (mkPoetryApplication { projectDir = ./.; }).dependencyEnv;

where a simplified view of my repo file structure is

    ├── flake.lock
    ├── flake.nix
    ├── namespace_server
    │   ├── api.py
    │   ├── registrar.py
    │   └── zone.py

Testing Plans

Testing includes:

- smoke tests of api (bash running curl + dig and later bruno (postman alternative))

- unit tests for all use cases of my python module

- load tests of API

- end-to-end (e2e) tests of my nss (namespace server) from local to local

This may feel like overkill for a project this simple, but it’s best practice for guarding against regressions and for incorporating test-driven development.

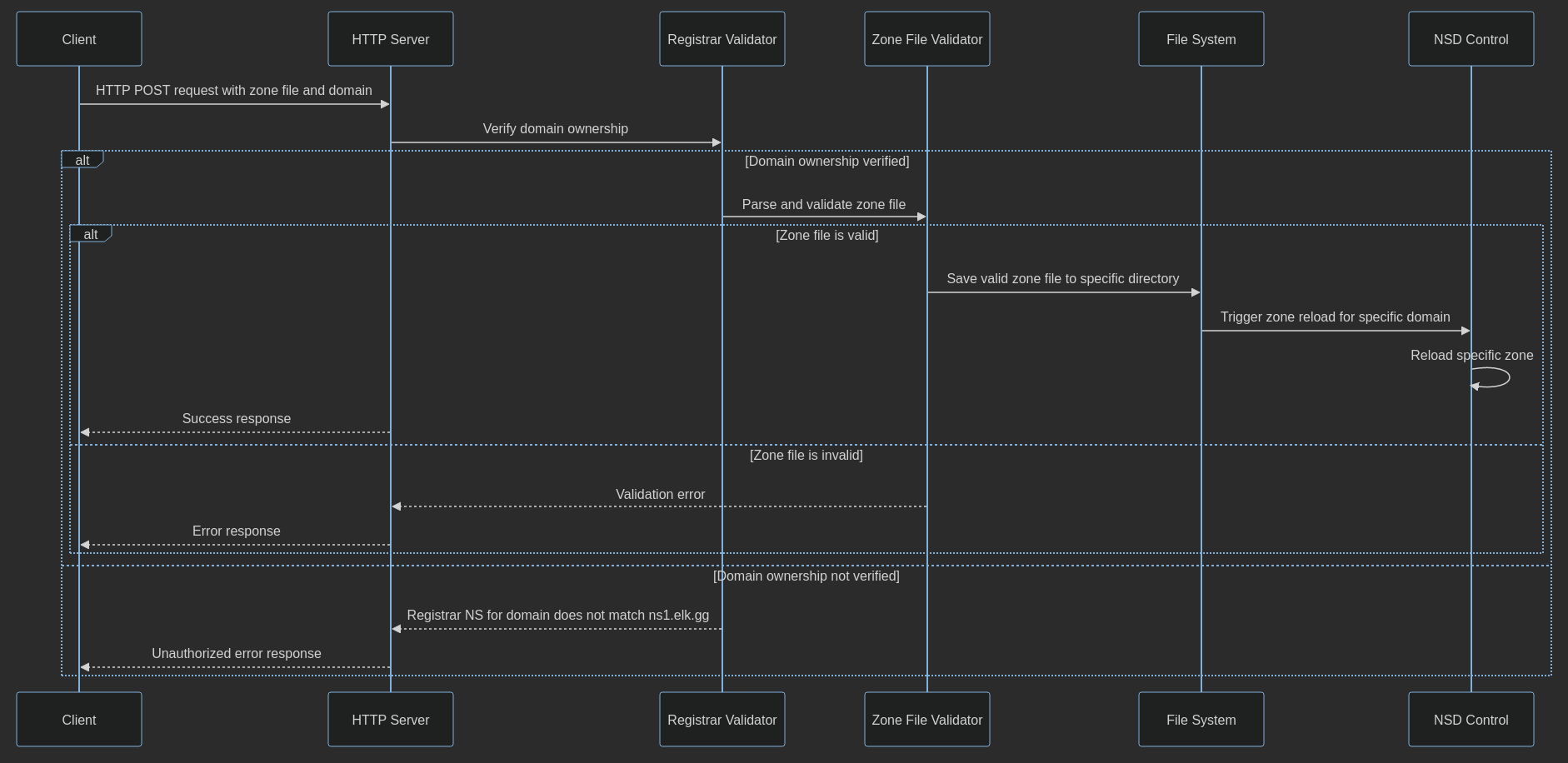

User Input Validation

The following is my initial idea of things to verify.

request content validation:

- does the user own the domain for which they’re adding a zone file?

- can I parse the zone file without an error?
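For the parsing check, a deliberately naive sketch might look like the following. It only recognizes lines of the form `[name] [ttl] IN TYPE rdata`, does not handle quoted semicolons or records without a class, and says nothing about domain ownership. `ZoneValidationError`, `parse_records`, and `validate_zone` are hypothetical names; a real implementation would more likely lean on a proper zone parser.

```python
class ZoneValidationError(ValueError):
    """Raised when an uploaded zone file fails a basic check."""


def parse_records(zone_text: str) -> list[tuple[str, str]]:
    """Return (type, first rdata token) for each line containing 'IN'."""
    records = []
    for raw_line in zone_text.splitlines():
        # Drop a trailing ';' comment, then split on whitespace.
        tokens = raw_line.split(";", 1)[0].split()
        if "IN" not in tokens:
            continue
        idx = tokens.index("IN")
        if len(tokens) >= idx + 3:
            records.append((tokens[idx + 1].upper(), tokens[idx + 2]))
    return records


def validate_zone(zone_text: str, required_ns: str = "ns1.elk.gg.") -> None:
    """Raise ZoneValidationError unless SOA and the expected NS are present."""
    records = parse_records(zone_text)
    if not any(rtype == "SOA" for rtype, _ in records):
        raise ZoneValidationError("no SOA record")
    ns_targets = {rdata.lower() for rtype, rdata in records if rtype == "NS"}
    if not ns_targets:
        raise ZoneValidationError("no NS records")
    if required_ns.lower() not in ns_targets:
        raise ZoneValidationError(f"{required_ns} is not listed as a name server")
```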

http considerations:

- rate limit requests (limit to 100 requests / second)

- load balancing

- http logging (who requested what)
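One common way to express a limit like 100 requests/second is a token bucket. The sketch below is a generic illustration of that technique, not necessarily how my API enforces its limit; `TokenBucket` and its parameters are hypothetical names, and the injectable clock exists only to make it easy to test.

```python
import time


class TokenBucket:
    """Allow up to `rate` requests per second, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float, now=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self._now = now
        self.updated = now()

    def allow(self) -> bool:
        """Refill based on elapsed time, then spend one token if available."""
        current = self._now()
        elapsed = current - self.updated
        self.updated = current
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A request handler would call `allow()` once per request and respond with HTTP 429 when it returns False. Note that with several worker processes, each worker would hold its own bucket unless the state is shared.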

Smoke Testing

This is intuitive enough to define and run through bash: send a request, check for a non-zero exit code, send a DNS query, check for a non-zero exit code.
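The same flow, sketched in Python rather than bash to stay consistent with the API code. The steps in `STEPS` are placeholders: the port, endpoint, and zone file name are assumptions based on the dev setup described in this post, and `step_ok` and `smoke_test` are hypothetical helper names.

```python
import subprocess


def step_ok(command: list[str]) -> bool:
    """Run one smoke-test step; a zero exit code counts as success."""
    try:
        return subprocess.run(command, capture_output=True).returncode == 0
    except FileNotFoundError:
        return False  # the tool itself is missing


def smoke_test(steps: list[list[str]]) -> bool:
    """Run steps in order and stop at the first failure."""
    return all(step_ok(step) for step in steps)


# Placeholder steps: upload a zone file, then query the DNS server.
STEPS = [
    ["curl", "--fail", "--silent", "--data-binary", "@example.com.zone",
     "http://localhost:8000/v1/zone?domain=example.com"],
    # dig usually exits 0 whenever it gets a reply, so a stricter check
    # would also inspect its output.
    ["dig", "@localhost", "example.com", "A", "+short"],
]
```

Calling `smoke_test(STEPS)` against a running dev server returns True only if every step exits with 0.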

Unit Testing

Self-proxying for testing api.py:

    import os

    # log is the module's logger, defined elsewhere in api.py
    if os.environ.get('ENVIRONMENT') == 'testing':
        log.info('testing enabled')

        def validate_registrar_nss(*args, **kwargs):
            pass
    else:
        from namespace_server.registrar import validate_registrar_nss

The real validate_registrar_nss raises an exception if its checks fail. You may think to use patch instead, but proxying at this level was easier for load-test.sh, as I generate random domains to feed into a template so that I can request a variety of domains when setting their zone files.
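The random-domain-plus-template idea can be sketched as follows. My actual load-test.sh is a bash script, so this is only a Python rendition of the approach; `ZONE_TEMPLATE`, `random_domain`, and `render_zone` are hypothetical names, and the reserved `.test` TLD is my choice for the example.

```python
import random
import string

# Template based on the minimal zone file shown earlier in this post.
ZONE_TEMPLATE = """$ORIGIN {domain}.
@ IN SOA ns1.elk.gg. postmaster.{domain}. (
    2019268754 ; Serial
    3600       ; Refresh
    1800       ; Retry
    604800     ; Expire
    86400 )    ; Minimum TTL
  IN NS ns1.elk.gg.
  IN A 10.0.1.102
"""


def random_domain(rng: random.Random, length: int = 12, tld: str = "test") -> str:
    """Return a random lowercase label under the given TLD."""
    label = "".join(rng.choice(string.ascii_lowercase) for _ in range(length))
    return f"{label}.{tld}"


def render_zone(domain: str) -> str:
    """Fill the zone template for one domain."""
    return ZONE_TEMPLATE.format(domain=domain)
```

Seeding the generator, for example `random.Random(0)`, keeps a load test run repeatable.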

unittest.mock and pytest, with an example of a test:

    from unittest.mock import patch

    @patch('namespace_server.api.validate_ns_records')
    def test_no_ns_records(mock_validate_ns, client):
        mock_validate_ns.side_effect = NoNSRecordsError("no NS records")
        response = client.post('/v1/zone?domain=example.com', data=b'zone data')
        assert response.status_code == 400
        assert response.data.decode('utf-8') == "no NS records"

where the side_effect is an instance of an exception I define.
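For readers new to side_effect, here is a stripped-down sketch of the mechanism using only the standard library. `handle_upload` is a hypothetical stand-in for the API route, and `NoNSRecordsError` is redefined here only so the example is self-contained.

```python
from unittest.mock import Mock


class NoNSRecordsError(Exception):
    """Stand-in for the exception class defined in the project."""


def handle_upload(validate) -> tuple[int, str]:
    """Hypothetical handler: turn a validation failure into a 400 response."""
    try:
        validate("example.com")
    except NoNSRecordsError as exc:
        return (400, str(exc))
    return (200, "ok")


# A Mock whose side_effect is an exception instance raises it when called.
failing = Mock(side_effect=NoNSRecordsError("no NS records"))
passing = Mock(return_value=None)

print(handle_upload(failing))
print(handle_upload(passing))
```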

Load Testing

Using vegeta, an HTTP load testing tool, to test my API, I was getting the error dial tcp 0.0.0.0:0->[::1]:12601, which made me realize I needed to explicitly bind to the IPv6 localhost too. So I added --bind [::1]:12601 to the gunicorn command that runs my Flask server, which has built up to

    script = ''
      ${namespace-server-api-pkg}/bin/gunicorn namespace_server.api:app \
        --bind 127.0.0.1:${toString port} \
        --bind [::1]:${toString port} \
        --timeout 30 \
        --workers 8 \
        --keep-alive 10
    '';

This code snippet is part of the systemd service config defined in the nix module for my API.

End-to-End Testing

Because nix and nixos are being used to bundle all the requirements in a declarative, reproducible build, we can simply import my flake into my system flake and go about testing.

We add my nss flake, sourced from a private GitHub repo of mine, as an input and then import it like so:

    nixosConfigurations = {
      desktop = nixpkgs.lib.nixosSystem {
        modules = [
          self.inputs.namespace-server.nixosModules.nss # testing

Then we can smoke test or load test as needed.

Future Work

The following are features I’d like to add as of the initial publishing of this post:

- actually make this available to others when it’s ready

- deletion of a zone file for a domain the user owns

- telemetry for API users

- automated performance measurements

- distributed nss across geographical areas (horizontal scaling)

- testing on a more powerful machine (vertical scaling)

- measuring cost of API and DNS queries

- served domain moderation and policy enforcement (email verification, terms of service)